Task Assessment Redesign

Task Assessment Dashboard: Delivering 80% Faster Feedback

Timeline

July ’23 - Aug ’23

People involved

Product Managers, Product Designer, Dev (Front end, Back end), Course Development Team, Operations Team.

Inefficient task assessment processes within Novatr led to delayed feedback, slowing learner progress. Implementing systemic changes, including notifications, an efficient feedback rubric, and a revamped UI, resulted in an 80% reduction in assessment times, from 24 hours to 4 hours.

Overview

Novatr creates professional courses for architects and civil engineers. One of the major modes of learning in these courses is the assignment, or task. After a learner submits a task, it needs a manual review, or assessment. This evaluation is done by a teaching assistant who goes by the title IG (Industry Guide).

Before this project, IGs took 24 hours on average to assess a task. That is slow enough to impede learning on a weekly basis.

Why does a low TAT (turnaround time) matter?

For Learners

It allows learners to improve themselves more quickly and continue with their learning activities. Sooner is better.

For Users (IGs)

Their compensation is tied to the number of tasks they can complete within a given time frame. Lower TAT means they can handle multiple tasks in a day and increase their earnings.

For Business

Average TAT is a metric that we use to evaluate the performance and efficiency of IGs. A lower TAT indicates better performance.

Problem Understanding

Typical Course Structure

Sample course timeline - BIM Professional Course for Architects (6 Months)

Learners have to complete a total of 120 tasks over a period of ~6 months. Tasks include submitting a file, sharing a cloud link, etc.

Each task is created by a team of Lead Mentors and the Course Development team, and is then uploaded to the platform by the Operations team. Once uploaded, the task becomes visible to learners, who can then submit it to an IG for assessment.

A typical journey of a task on the learner's end looks like this:

Who is an Industry Guide?

Industry Guides (IGs) are experienced professionals with 4-5 years of industry expertise. In addition to their primary job, they work part-time with us to assist in various course operations such as assessing tasks, addressing learner inquiries, and supporting learners in their coursework.

After a learner submits a task, it goes to an IG for assessment, and the IG's journey looks like this:

Pain Points

Learner Pain Points

The old 5-point feedback scale (Bad to Good) had three key issues:

Not Actionable – A high score didn’t explain how to improve.

Inconsistent Ratings – IGs had different interpretations, leading to varied results.

Lack of Guidance – Feedback lacked clarity for next steps.

IG Pain Points

While functional for an MVP, the previous system had major flaws:

No Submission Alerts – IGs often missed timely task updates.

No Deadline Visibility – Without deadlines, prioritization suffered.

Time-Consuming – Rating multiple parameters took ~8 minutes per task.

Low Motivation – Repetitive tasks without urgency led to procrastination.

Ops Pain Points

Though behind the scenes, Ops faced critical challenges:

No Backup Plan – IG dropouts disrupted workflows.

Manual Tracking – Caused inconsistencies in IG compensation.

High Support Load – Standardisation gaps triggered frequent learner queries.

Current UI Audit

UI Issues with Existing Designs

The assessment system was still using the old design, while the rest of the product line had been updated to the new design language as part of the transition from Oneistox to Novatr.

There is a lack of context when an Industry Guide (IG) opens the assessment sheet. They cannot see the learner's name or file, which is crucial for maintaining awareness while assessing a task.

Nested sidesheets cause users to lose track of their workflow, creating confusion and extra cognitive effort.

How might we motivate users to prioritise early assessments while discouraging procrastination?

How might we ensure users receive timely notifications to stay informed?

How might we create a feedback rubric that maximizes efficiency and provides actionable feedback?

How might we enhance the user experience to seamlessly integrate changes while adhering to UI best practices?

Solution Ideation

With clear problem statements and objectives in place, we started working towards solutions, starting with system design and then moving on to a useful interface design.

Designing a time-based incentivised journey

💰 How will IGs get paid?

If the IG completes the evaluation, they receive E = INR 150 or $2.

If the IG completes the evaluation within X = 4 hours of receiving the submission, they earn E + E1, where E1 = 25% of E (in INR) or 33.5% of E (in $).

If the IG requests resubmission of the task, they do not earn anything.

The 24-hour window can be changed from course to course, according to course requirements.
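The payment rules above can be sketched as a small function. This is only an illustration, not the production logic: the names `ig_payout_inr`, `BASE_PAY_INR`, `BONUS_RATE_INR`, and `BONUS_WINDOW` are hypothetical, only the INR amounts are modelled, and treating exactly 4 hours as still eligible for the bonus is an assumption.

```python
from datetime import datetime, timedelta

# Values taken from the rules above (INR only).
BASE_PAY_INR = 150.0                 # E
BONUS_RATE_INR = 0.25                # E1 = 25% of E
BONUS_WINDOW = timedelta(hours=4)    # bonus window after submission


def ig_payout_inr(
    submitted_at: datetime,
    evaluated_at: datetime,
    resubmission_requested: bool = False,
) -> float:
    """Return the IG's payout in INR for one evaluated task."""
    # A resubmission request earns nothing.
    if resubmission_requested:
        return 0.0

    payout = BASE_PAY_INR
    # Assumption: finishing at exactly 4 hours still counts as "within".
    if evaluated_at - submitted_at <= BONUS_WINDOW:
        payout += BASE_PAY_INR * BONUS_RATE_INR
    return payout


submitted = datetime(2023, 7, 1, 9, 0)
fast = ig_payout_inr(submitted, submitted + timedelta(hours=3))
slow = ig_payout_inr(submitted, submitted + timedelta(hours=5))
print(fast, slow)
```

Keeping the amounts and the window as named constants mirrors the point that the window may vary from course to course.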

Subsequent improvements:

30-40% of IGs complained that a task was suddenly transferred just as they started assessing it. It later turned out that these IGs were starting their evaluation right before the deadline. To accommodate this unavoidable behaviour, a 15-minute buffer was introduced in the timeline before the Transfer phase.

⏰ Timely Reminders

The IG is notified immediately after the learner submits a task.

The IG gets X hours (X = 24) to start assessing the task.

At X - Y hours (Y = 12), the IG gets a reminder if the assessment has not been started.

If the IG has not started assessing the task within X hours of submission, the task is forwarded to another available IG.
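The reminder timeline above can be expressed as a handful of timestamps derived from the submission time. The sketch below is illustrative only: `assessment_schedule` and its parameter names are hypothetical, and placing the 15-minute buffer after the X-hour deadline (so the transfer fires at X hours + 15 minutes) is an assumption about how the buffer fits into the timeline.

```python
from datetime import datetime, timedelta


def assessment_schedule(
    submitted_at: datetime,
    window: timedelta = timedelta(hours=24),           # X
    reminder_lead: timedelta = timedelta(hours=12),    # Y
    transfer_buffer: timedelta = timedelta(minutes=15),
) -> dict[str, datetime]:
    """Compute when each notification or hand-off for a task is due."""
    deadline = submitted_at + window
    return {
        # The IG is notified as soon as the learner submits.
        "notify_at": submitted_at,
        # Reminder at X - Y hours if the assessment has not started.
        "remind_at": deadline - reminder_lead,
        # The IG must start assessing before this time.
        "deadline": deadline,
        # Assumption: the transfer happens only after the buffer elapses.
        "transfer_at": deadline + transfer_buffer,
    }


schedule = assessment_schedule(datetime(2023, 7, 1, 9, 0))
for event, when in schedule.items():
    print(event, when.isoformat())
```

Because the window, reminder lead, and buffer are parameters, a course with different requirements could pass its own values without changing the logic.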

Making Feedback Actionable and Efficient

In collaboration with the Course Development team, we proposed a two-point feedback method that improves both evaluation and communication with learners. This approach is backed by educational research and studies.

Information Architecture of tasks and their details (Simplified)

Creating wireflows to map out a seamless end-to-end journey

Completing the wireflows gave us two main types of 🖥️ screens:

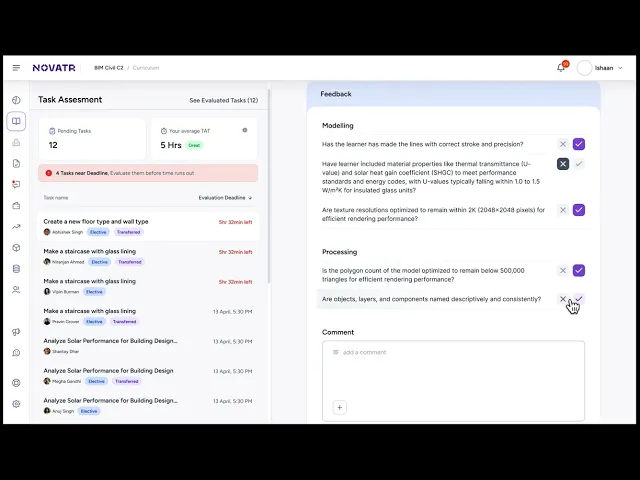

Task Listing

This page lists the tasks an IG has to evaluate. Alongside the list, it shows how many tasks remain and offers helpful tips to improve the IG's performance.

Evaluation Desk

This page contains the assessment sheet to be filled in by an IG, along with the submitted file and the task instructions.

Wireframes

1. Task Listing

Tasks on this page are ordered by the time remaining to assess them, which puts the most urgent task at the top of the list.
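As a rough sketch of this ordering, the list can be sorted by the time left until each task's assessment deadline. The `PendingTask` type, its fields, and `order_by_urgency` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class PendingTask:
    task_id: str
    deadline: datetime  # time by which the IG must start assessing


def order_by_urgency(tasks: list[PendingTask], now: datetime) -> list[PendingTask]:
    """Return tasks with the least time remaining first."""
    return sorted(tasks, key=lambda task: task.deadline - now)


now = datetime(2023, 7, 1, 12, 0)
tasks = [
    PendingTask("task-a", datetime(2023, 7, 2, 9, 0)),
    PendingTask("task-b", datetime(2023, 7, 1, 15, 0)),
    PendingTask("task-c", datetime(2023, 7, 1, 20, 0)),
]
print([task.task_id for task in order_by_urgency(tasks, now)])
```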

2. Evaluation Desk (2 Explorations)

This page helps the IG fill in the assessment sheet provided by the Course Dev team. In this isolated view, they can assess all pending tasks in one go.

These two variants were shown internally and tested with actual users. The feedback helped us move quickly; the key learnings are listed below.

Variant 1

Pros

Isolated screen for assessing a single task

Efficient and clean layout

Cons

Hidden navigational elements

IGs do not frequently need the learner instructions when assessing a task, because submissions for the same task come together in batches.

Cannot switch between tasks

Variant 2

Pros

Can switch between tasks and pick them in any preferred order

Saves vertical real estate because of a single header

Cons

Hidden navigational elements

IGs do not frequently need the learner instructions when assessing a task, because submissions for the same task come together in batches.

Extra elements on the page

With these learnings, we created a UI that combines the best parts of both ideas, shown in the following section.

Final UI

Prototype Video

UI Screenshots

Hi-Fidelity / Task list page + Feedback Rubric

Hi-Fidelity / Resubmit Task

Hi-Fidelity / Evaluated Task

Components

Component / Task cards

Component / Checklist Items

Component / Submission Card with nested instance of Link/File type based submission

Impact

System Wide Improvements

80% TAT ↓

24 hrs -> 4 hrs

3 Tickets/day ↓

from 11 tickets/day

Industry Guides can now help learners faster, which resulted in better learner CSAT (3.8 -> 4.) and the highest-ever learner score of 98%.

Reflections & Next Steps

Simple ideas, like making the time remaining visible, can drive a lot of behaviour change.

Collaboration with the course team helped us improve the feedback rubric, making feedback easier to give and consume.